In the earlier article, we saw the key concepts and terms used in Machine Learning. In this article, let us focus on how Microsoft is involved in Machine Learning, and look at Data Collection & Management in depth.

Azure Machine Learning in Cloud

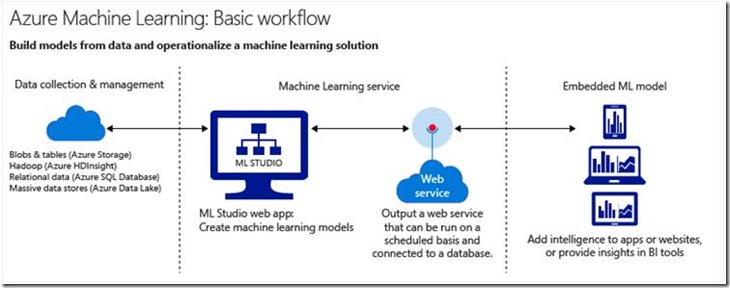

The diagram (courtesy: Microsoft) shows the basic workflow of Azure Machine Learning.

Azure Machine Learning comprises three sections.

1. Data collection & Management

a. The data can be collected from any kind of source. This follows the Extraction, Transformation and Loading (ETL) pattern.

b. Raw data can come from many sources. For example, even a newspaper delivery person can tell us how many papers were sold on a particular street.

c. Once the raw data is collected, it needs to be cleansed and transformed into meaningful data.

d. The meaningful data then needs to be stored. The store can be a SQL database, a flat file, or any other kind of DBMS.

e. Azure offers four types of storage for Machine Learning.

i. Blobs & Tables (Azure Storage)

ii. Hadoop (Azure HDInsight)

iii. Relational Data (Azure SQL Database)

iv. Massive data stores (Azure Data Lake)

2. Machine Learning Service

a. This is the processing section, where we create our Model and convert the Model into a Service.

b. To create the Model, we have Azure Machine Learning Studio, which we will see in an upcoming article in this series.

3. Embedded ML Model

a. This is nothing but consuming the Service from our Client Application. The Client Application can be any application, such as a .NET application or a SharePoint site.
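The extract, cleanse, transform and store steps described under Data collection & Management can be sketched in a few lines of Python. This is only a minimal illustration, not how Azure does it: the raw CSV text, the `sales` table and all function names are made up for this example, and an in-memory SQLite database stands in for whichever store is actually used.

```python
import csv
import io
import sqlite3

# Hypothetical raw data: papers sold per street, with some messy entries.
RAW_CSV = """street,papers_sold
Main Street, 42
 Oak Avenue,17
Elm Road,
Pine Lane,abc
"""


def extract(raw_text):
    """Extract: read the raw rows from CSV text."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: trim whitespace and keep only rows with a valid count."""
    clean = []
    for row in rows:
        street = (row["street"] or "").strip()
        count = (row["papers_sold"] or "").strip()
        if street and count.isdigit():
            clean.append((street, int(count)))
    return clean


def load(records, connection):
    """Load: store the cleansed records in a relational table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS sales (street TEXT, papers_sold INTEGER)"
    )
    connection.executemany("INSERT INTO sales VALUES (?, ?)", records)
    connection.commit()


def run_etl(raw_text, connection):
    """Run the three steps and return what ended up in the store."""
    load(transform(extract(raw_text)), connection)
    return connection.execute(
        "SELECT street, papers_sold FROM sales ORDER BY street"
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # in-memory stand-in for a real store
    print(run_etl(RAW_CSV, conn))
```

The two rows with a missing or non-numeric count are dropped during the transform step, so only the clean records reach the table.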

Input Data Management

Now, let us focus on Data Management. This is very important because, as we saw in the earlier definitions, the entire Machine Learning concept revolves around past (historical) data. If the historical data (the input) is not appropriate, the entire prediction may fail.

In other words, if the input data set is wrong, the prediction will also be wrong.

The input data should be checked against the following 4 criteria.

1. Relevant

2. Connected

3. Accurate

4. Enough to work with (Adequate)

1. Relevant

a. The data should be relevant to what we want to predict.

b. Example:

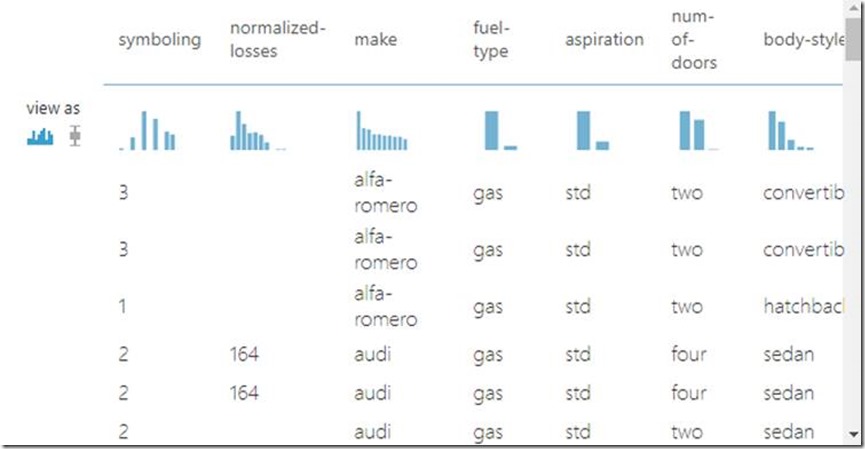

i. Let us take a data set which has all the properties of a Car along with its Price. (We will use this data set for the entire series.)

ii. The properties of the Car include Size, Body, Cubic Capacity, Number of Seats, etc.

iii. The Price is the primary property we are interested in.

iv. With this information, can we predict the price of Petrol? We cannot. The data is irrelevant for predicting the price of Petrol.

v. The data is, however, relevant for predicting the price of a new car which is going to be launched. If we know the properties of the new car, then we can predict its price.
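One rough way to put a number on relevance is to check how strongly a property moves together with the value we want to predict. The article does not prescribe this method; the sketch below uses a plain Pearson correlation as an assumed stand-in, and every value in it is made up purely for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Made-up illustrative values, not taken from the article's data set.
engine_size = [1.0, 1.2, 1.6, 2.0, 3.0]          # a car property
car_price = [8000, 9500, 12000, 15000, 24000]    # rises with engine size
petrol_price = [1.52, 1.47, 1.55, 1.49, 1.51]    # unrelated to the car

relevant = pearson(engine_size, car_price)
irrelevant = pearson(engine_size, petrol_price)
print(f"engine size vs car price:    {relevant:.2f}")
print(f"engine size vs petrol price: {irrelevant:.2f}")
```

A value close to 1 or -1 suggests the property is useful for the prediction, while a value close to 0 suggests it tells us little about the target.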

2. Connected

a. The data set which we take as the Input should have proper data for all the properties. If any property is missing a value, the entire regression will be skewed.

If we look at the above data set, the property “normalized-losses” does not have a value for every record. If we use this data set as it is, the regression will not be accurate.

We can either fill the property with a default value (not recommended, as the value will not be accurate) or delete the entire record from the input data set.
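Both options can be sketched in plain Python. The records below are hypothetical, `None` is assumed to mark a missing value, and using the mean of the known values as the default is just one possible choice that the article does not specify.

```python
from statistics import mean

# Hypothetical records; None marks a missing "normalized-losses" value.
records = [
    {"make": "car-a", "normalized-losses": 164, "price": 13950},
    {"make": "car-b", "normalized-losses": None, "price": 16500},
    {"make": "car-c", "normalized-losses": 158, "price": 17710},
    {"make": "car-d", "normalized-losses": None, "price": 13495},
]


def drop_incomplete(rows, column):
    """Option 1: delete every record that has no value for the column."""
    return [row for row in rows if row[column] is not None]


def fill_with_default(rows, column, default):
    """Option 2: fill the gaps with a default value (less accurate)."""
    return [
        {**row, column: default if row[column] is None else row[column]}
        for row in rows
    ]


complete = drop_incomplete(records, "normalized-losses")

# Assumed default: the mean of the values that are present.
column_mean = mean(row["normalized-losses"] for row in complete)
filled = fill_with_default(records, "normalized-losses", column_mean)

print(len(complete), "records kept after dropping incomplete ones")
print(len(filled), "records kept after filling with", column_mean)
```

Dropping records keeps only true values but shrinks the data set, while filling keeps every record at the cost of introducing values that were never observed.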

3. Accurate

a. The data should be accurate. We can compare this with a bull's-eye: the shots should be clustered around the center. In the same way, our data should be spread around the center of the decision.

4. Enough Data

a. We should have adequate data to train our Model. We cannot come to a conclusion with one or two records. The minimum amount of data needed depends on the complexity of the requirement. A complex requirement needs a large data set; a straightforward one can work with a small data set.

b. Usually, 75% of the available data is used for Training and the remaining 25% to Evaluate the Model.
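A 75/25 split like this can be sketched as follows. The helper name `split_rows` is made up for this example, and shuffling the records before splitting (with a fixed seed so the result is repeatable) is an assumption on my part rather than something the split itself requires.

```python
import random


def split_rows(rows, train_fraction=0.75, seed=0):
    """Shuffle a copy of the rows and split it into training and evaluation sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded for a repeatable split
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


rows = list(range(100))  # stand-in for 100 input records
train, test = split_rows(rows)
print(len(train), "records for training,", len(test), "for evaluation")
```

Every record ends up in exactly one of the two sets, so the Model is evaluated only on data it has not seen during training.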

Happy Coding,

Sathish Nadarajan.
